The race is on to build a ‘universal’ quantum computer. Such a device could be programmed to speedily solve problems that classical computers cannot crack, potentially revolutionizing fields from pharmaceuticals to cryptography. Many of the world’s major technology firms are taking on the challenge, but Microsoft has opted for a more tortuous route than its rivals.

IBM, Google and a number of academic labs have chosen relatively mature hardware, such as loops of superconducting wire, to make quantum bits (qubits). These are the building blocks of a quantum computer: they power its speedy calculations thanks to their ability to be in a mixture (or superposition) of ‘on’ and ‘off’ states at the same time.

Microsoft, however, is hoping to encode its qubits in a kind of quasiparticle: a particle-like object that emerges from the interactions inside matter. Some physicists are not even sure that the particular quasiparticles Microsoft is working with, called non-abelian anyons, actually exist. But the firm hopes to exploit their topological properties, which make quantum states extremely robust to outside interference, to build what are called topological quantum computers. Early theoretical work on topological states of matter won three physicists the Nobel Prize in Physics on 4 October.

The firm has been developing topological quantum computing for more than a decade and today has researchers writing software for future machines, and working with academic laboratories to craft devices. Alex Bocharov, a mathematician and computer scientist who is part of Microsoft Research’s Quantum Architectures and Computation group in Redmond, Washington, spoke to Nature about the company’s work.

Alex Bocharov works on topological quantum computing at Microsoft Research.

How did Microsoft end up focusing on perhaps the most difficult quantum-computing hardware of all — topological qubits?

We’re people-centric, rather than problem-centric. And quantum-computing dignitaries, such as Alexei Kitaev, Daniel Gottesman and, most notably, Michael Freedman [the Fields Medal-winning director of Microsoft’s Station Q research lab], spearheaded the growth of our quantum-computing groups. So it was Freedman’s own trailblazing vision about how to do things that we followed.

Both IBM and Google use superconducting loops as their qubits. What are the qubits you are trying to harness?

Our qubits are not even material things. But then again, the elementary particles that physicists run in their colliders are not really solid material objects. Here we have non-abelian anyons, which are even fuzzier than normal particles. They are quasiparticles. The most studied kinds of anyon emerge from chains of very cold electrons that are confined at the edge of a 2D surface. These anyons act like both an electron and its antimatter counterpart at the same time, and appear as dense peaks of conductance at each end of the chain. You can measure them with high-precision devices, but not see them under any microscope.

Anyon-like particles were first predicted as stand-alone objects in 1937, and Kitaev suggested that quasiparticle versions could be used in quantum computers in 1997¹. But it was only in 2012 that physicists first claimed to have spotted them. Are you even certain that they exist?

We are pretty sure that the simplest species do exist. These were observed, we think, in 2012 by Leo Kouwenhoven at the Delft University of Technology in the Netherlands². I wouldn’t say there is 100% consensus on that, but Kouwenhoven’s observation has been reproduced in various other labs³. It doesn’t really matter what exactly these excitations are, as long as they are measurable and can be used to perform calculations. Now it has come to a point where labs are putting together some very sophisticated equipment to produce those excitations in quantity and to start trying to do calculations.

Developing anyons seems very difficult. What are the advantages of using anyons rather than other kinds of qubit?

In most quantum systems, information is encoded in the properties of particles, and the slightest interaction with their surroundings will destroy their quantum state. This means they operate with a precision of maybe 99.9%, or what we call three nines. To do real problems, we need precision at the level of ten nines, so you need to create a massive array of qubits that allows you to correct for the errors. Topological quantum computing has the promise of reaching up to six or seven nines, which means we wouldn’t need to have this extensive and expensive error correction.
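To make the ‘nines’ arithmetic concrete, here is a rough sketch in Python. It assumes, as a simplification, that every gate fails independently with the same probability. It also uses a hypothetical circuit of one million gates, chosen only for illustration. Real error models and circuit sizes vary.

```python
import math

def nines(fidelity: float) -> float:
    """Number of 'nines' in a fidelity, e.g. 0.999 -> about 3."""
    return -math.log10(1.0 - fidelity)

def success_probability(error_per_gate: float, num_gates: int) -> float:
    """Chance that all num_gates gates succeed, ASSUMING independent, identical errors."""
    # log1p keeps precision when error_per_gate is tiny (e.g. 1e-10)
    return math.exp(num_gates * math.log1p(-error_per_gate))

HYPOTHETICAL_GATES = 1_000_000  # illustrative circuit size, not from the interview

for label, p in [("three nines", 1e-3), ("six nines", 1e-6),
                 ("seven nines", 1e-7), ("ten nines", 1e-10)]:
    print(f"{label:>11}: nines={nines(1 - p):.1f}, "
          f"P(error-free run)={success_probability(p, HYPOTHETICAL_GATES):.3g}")
```

Under these assumptions, three nines leave essentially no chance of a million-gate run finishing cleanly, which is why such hardware relies on error correction. Each additional nine sharply raises the odds.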

What about topological quantum computing makes it so robust?

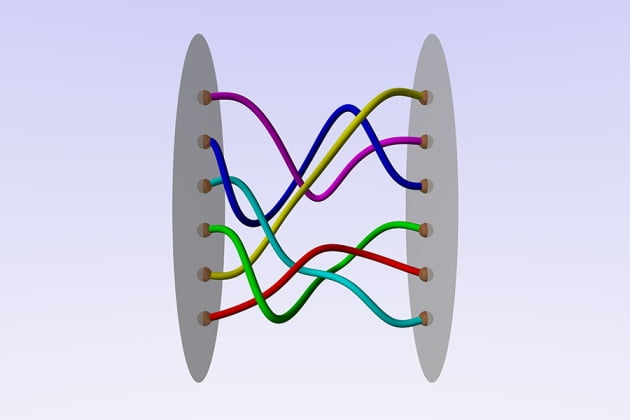

Noise from the environment and other parts of the computer is inevitable, and that might cause the intensity and location of the quasiparticle to fluctuate. But that’s OK, because we do not encode information into the quasiparticle itself, but in the order in which we swap positions of the anyons. We call that braiding, because if you draw out a sequence of swaps between neighbouring pairs of anyons in space and time, the lines that they trace look braided. The information is encoded in a ‘topological’ property — that is, a collective property of the system that only changes with macroscopic movements, not small fluctuations.
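The claim that the information lives in the order of the swaps can be checked numerically for the simplest non-abelian case. The sketch below is an illustrative assumption, not Microsoft’s code. It models four Majorana-type anyons as operators on two qubits using a standard Jordan–Wigner construction. Exchanging neighbours i and j is represented by the unitary exp(π/4 · γ_i γ_j), which is one common sign convention. The sketch requires NumPy.

```python
import numpy as np

# Single-qubit Pauli matrices
I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)

# Four Majorana operators on two qubits (Jordan-Wigner); they square to
# the identity and mutually anticommute.
gammas = [np.kron(X, I2), np.kron(Y, I2), np.kron(Z, X), np.kron(Z, Y)]

def exchange(i: int, j: int) -> np.ndarray:
    """Unitary for swapping anyons i != j: exp(pi/4 * g_i g_j)."""
    a = gammas[i] @ gammas[j]  # a @ a == -I, so exp(t*a) = cos(t) I + sin(t) a
    return (np.eye(4) + a) / np.sqrt(2)

s1, s2, s3 = exchange(0, 1), exchange(1, 2), exchange(2, 3)

# Order matters: swapping pair 1 then pair 2 differs from the reverse order.
print("s1 s2 == s2 s1:", np.allclose(s1 @ s2, s2 @ s1))                    # False
# Braid relation: two drawings of the same braid give the same operation.
print("s1 s2 s1 == s2 s1 s2:", np.allclose(s1 @ s2 @ s1, s2 @ s1 @ s2))   # True
# Swaps of non-neighbouring pairs commute.
print("s1 s3 == s3 s1:", np.allclose(s1 @ s3, s3 @ s1))                    # True
# Swapping the same pair twice is not the identity.
print("s1 s1 == identity:", np.allclose(s1 @ s1, np.eye(4)))               # False
```

Because `s1 @ s2` and `s2 @ s1` differ, two braids built from the same swaps in a different order act differently on the encoded state. Braiding this simplest species alone is known not to yield every gate a universal quantum computer needs, so the sketch illustrates the principle rather than a complete gate set.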

Microsoft has been working on topological quantum computing for more than a decade, for most of which the necessary qubits were hypothetical. Why are you playing such a long game?

It’s a game worth playing, because the upside is enormous and there is practically no downside. Microsoft is a very affluent company; it sits on something like US$100 billion in cash. So what else would you invest in? Bill Gates is also investing in other things, such as efforts to eradicate malaria and HIV, that might require quantum computing at some point. Genomics, for example, has so far been done on classical computers, and there is a possibility that huge progress could be made with something like 100–200 qubits on a quantum computer.

How many people does Microsoft have working on quantum computing, and how much are you spending?

A rough number I would say is 35–40 people, but I don’t think I’m at liberty to speak about the amount of money, even in terms of rough estimates.

Your team has been working on developing software for this kind of quantum computer. What have you been up to?

So far, we’ve had an amazing ride in terms of creating more-efficient algorithms — reducing the number of qubit interactions, known as gates, that you need to run certain computations that are impossible on classical computers. In the early 2000s, for example, people thought it would take about 24 billion years to calculate on a quantum computer the energy levels of ferredoxin, which plants use in photosynthesis. Now, through a combination of theory, practice, engineering and simulation, the most optimistic estimates suggest that it may take around an hour. We are continuing to work on these problems, and gradually switching towards more applied work, looking towards quantum chemistry, quantum genomics and things that might be done on a small-to-medium-sized quantum computer.
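For a sense of scale, the two ferredoxin estimates quoted above differ by roughly fourteen orders of magnitude. The sketch below only does the unit conversion. It takes the interview’s rough figures at face value and assumes a 365.25-day year.

```python
HOURS_PER_YEAR = 365.25 * 24  # assumption: Julian year of 365.25 days

early_2000s_estimate_hours = 24e9 * HOURS_PER_YEAR  # "about 24 billion years"
optimistic_estimate_hours = 1.0                     # "around an hour"

speedup = early_2000s_estimate_hours / optimistic_estimate_hours
print(f"reduction factor ≈ {speedup:.1e}")  # reduction factor ≈ 2.1e+14
```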

Isn’t this jumping the gun, given that a working quantum computer that could handle such questions is still perhaps a decade away?

In the past, the question was always whether something was a problem where a quantum computer would hypothetically be better than a classical computer. Now we want to figure out not just whether it is doable, but how doable it is. We need to move a lot of earth to figure that out, but it’s worth it, because we believe this is going to be a whole industry in itself.

[Source: Nature]