
Facebook announced an expansion of several initiatives Thursday to combat the spread of misinformation on the social network used by more than 2 billion people.

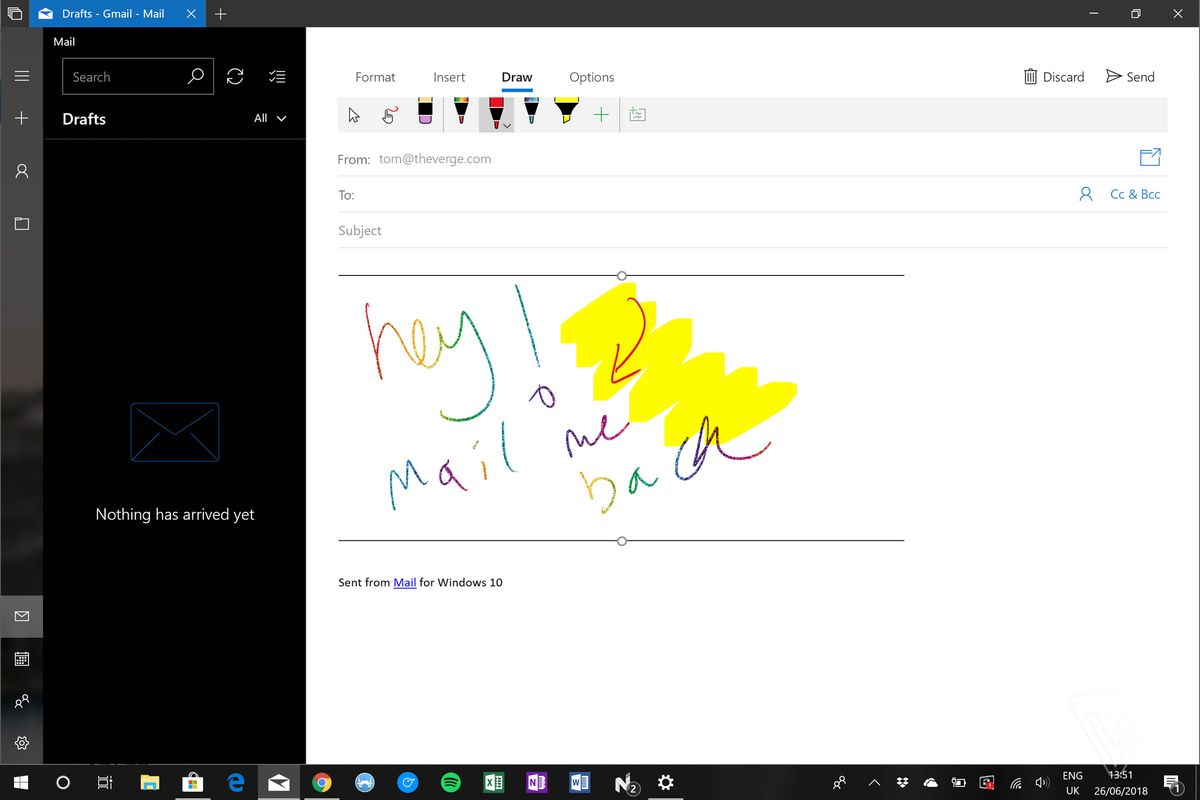

In a company blog post, Facebook acknowledged that fake news reports and doctored content have increasingly become image-based in some countries, making it harder for readers to discern whether a photo or video related to a news event is authentic. The company said it has expanded its fact-checking beyond traditional links posted on Facebook to include photos and videos. Partnering with third-party experts trained in visual verification, the company will also flag images that have been posted on Facebook in a misleading context, for example, a photo of a previous natural disaster or shooting that is presented as a current event.

Facebook will also use machine-learning tools to identify duplicates of debunked stories that continue to pop up on the network. The company said that more than a billion pictures, links, videos and messages are uploaded to the social platform every day, making fact-checking by human review alone difficult. The automated tools will help the company find domains and links that are spreading the same claims that have already been proved false. Facebook has previously said it will use AI to limit misinformation, but the latest update applies that technology specifically to finding duplicates of false claims.

Earlier this year, Facebook said it would start a new project to help provide independent research on social media’s role in elections and within democracies. The commission in charge of the elections research is hiring staff to run the initiative, will launch a website in the coming weeks and will request research proposals on the scale and effects of misinformation on Facebook, the social network said. “Over time, this externally-validated research will help keep us accountable and track our progress,” Facebook said.

The other updates announced Thursday include using machine learning to identify repeat offenders who spread misinformation and expanding Facebook's fact-checking partnerships internationally.

Mike Ananny, a communications professor at the University of Southern California, said the updates are a step in the right direction but that Facebook has not fully explained what it's doing to combat fake news or shared details about how its human-led and automated detection systems actually work. Ananny suggested that Facebook share the algorithms used by its machine learning systems, the data those systems are trained on, and whether any systemic errors have been identified within them.

"Facebook is on this complicated journey of trying to figure out what its responsibility is to journalism and to the public," he said. But it's not clear to him how the company defines success in these efforts, and that lack of clarity may help the company evade accountability.

The latest announcement is part of Facebook's effort, now spanning more than a year and a half, to grapple with fake accounts, disinformation and accountability on the network. "This effort will never be finished, and we have a lot more to do," Facebook said.

Last year, the company turned over to Congress more than 3,000 Facebook ads created by Russian operatives to exploit cultural divisions in the United States and influence the 2016 presidential election. The Russian campaign, and the broader issue of false news stories and hoaxes, raised lingering questions about the company’s role in vetting information.

In recent months, Facebook and its chief executive, Mark Zuckerberg, have also come under intense criticism over data privacy. The now-shuttered political consultancy Cambridge Analytica improperly accessed millions of Facebook users’ personal information, reports revealed earlier this year. Since then, Facebook has endured several rounds of questioning by lawmakers in Europe and the United States. The company has pledged greater transparency in its handling of user data.

Source: gadgets.ndtv